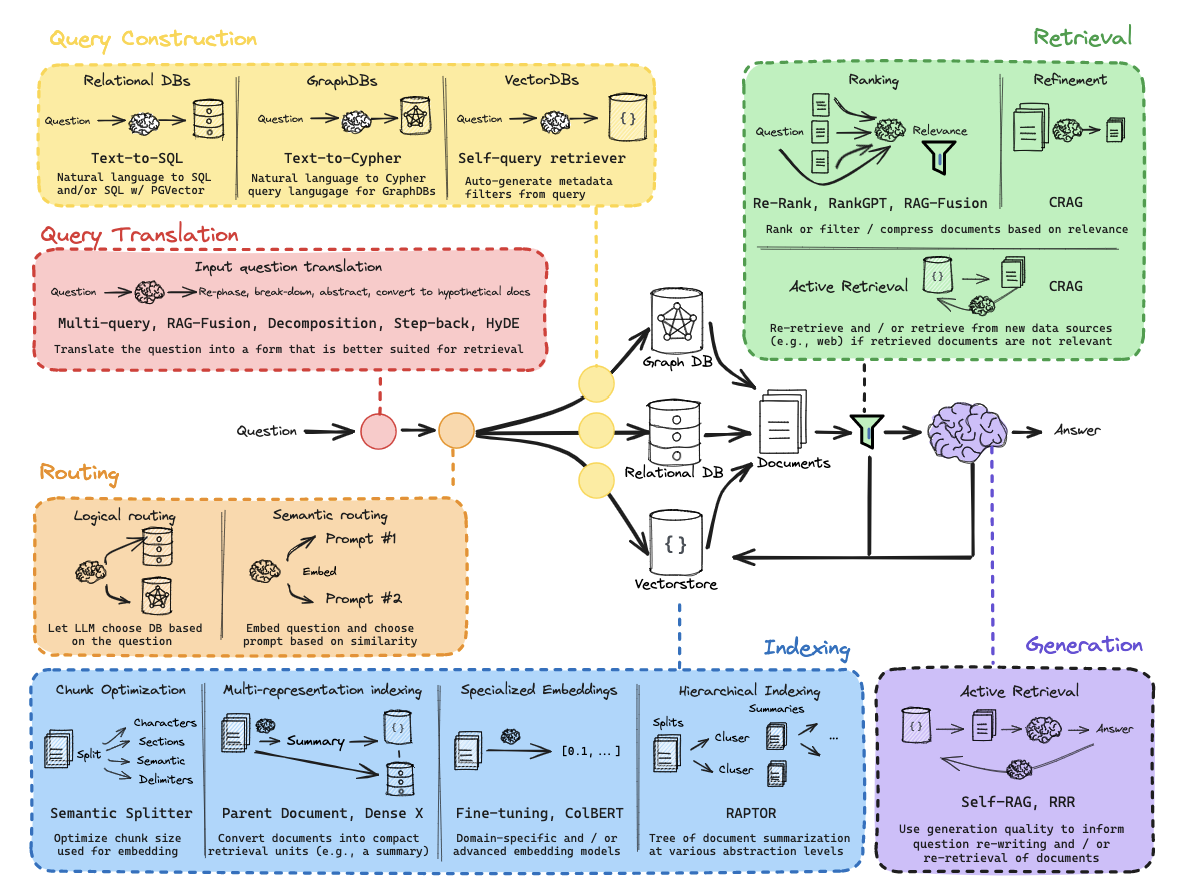

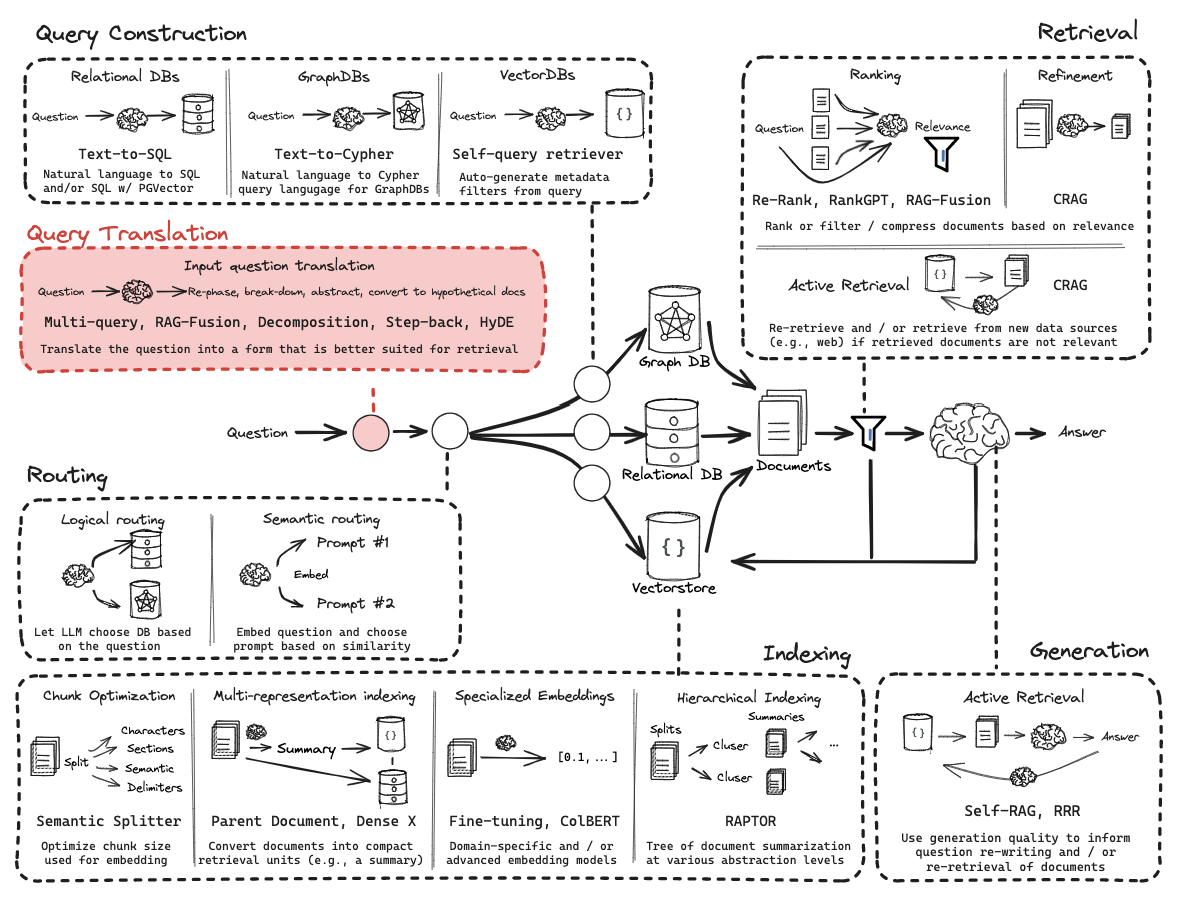

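It all starts with this diagram, the classic RAG diagram.

It covers Question -> Translation -> Routing -> Construction -> DB (VectorStore) -> Indexing -> Documents -> Retrieval -> Generation -> Answer.

Today we will work through this diagram together and take it apart piece by piece. By the end, you should have a solid understanding of RAG.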

References

https://youtube.com/playlist?list=PLfaIDFEXuae2LXbO1_PKyVJiQ23ZztA0x&feature=shared

https://github.com/langchain-ai/rag-from-scratch/blob/main/rag_from_scratch_1_to_4.ipynb

The whole RAG pipeline: Overview

```python
import bs4
from langchain import hub
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

#### INDEXING ####

# Load the document
loader = WebBaseLoader(
    web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),
    bs_kwargs=dict(
        parse_only=bs4.SoupStrainer(
            class_=("post-content", "post-title", "post-header")
        )
    ),
)
docs = loader.load()

# Split
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
splits = text_splitter.split_documents(docs)

# Embed and store
vectorstore = Chroma.from_documents(documents=splits,
                                    embedding=OpenAIEmbeddings())

retriever = vectorstore.as_retriever()

#### RETRIEVAL and GENERATION ####

# Prompt
prompt = hub.pull("rlm/rag-prompt")

# LLM
llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

# Post-processing: join retrieved chunks into one context string
def format_docs(docs):
    return "\n\n".join(doc.page_content for doc in docs)

# Chain
rag_chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

# Question -> answer
rag_chain.invoke("What is Task Decomposition?")
```

LangChain makes this easy. A few lines of code cover the entire RAG process.
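In the chain above, each `|` composes two steps from left to right, so one step's output becomes the next step's input. A dict such as `{"context": ..., "question": ...}` sends the same input to every branch. The sketch below is only a mental model. It uses a hypothetical minimal `Step` class that mimics this composition without any model or API key. It is not LangChain's actual `Runnable` implementation, and the toy retriever, prompt, and "LLM" are made up.

```python
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a LangChain Runnable: wraps a function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: Any) -> "Step":
        nxt = as_step(other)
        # Run this step first, then feed its output into the next one
        return Step(lambda value: nxt.invoke(self.invoke(value)))

    def __ror__(self, other: Any) -> "Step":
        # Allows a plain dict or function on the left-hand side of `|`
        return as_step(other) | self


def as_step(obj: Any) -> Step:
    """Coerce functions and dicts into Steps, similar in spirit to how LCEL coerces them."""
    if isinstance(obj, Step):
        return obj
    if isinstance(obj, dict):
        branches = {key: as_step(val) for key, val in obj.items()}
        # A dict runs every branch on the same input and collects the outputs by key
        return Step(lambda value: {key: step.invoke(value) for key, step in branches.items()})
    return Step(obj)


# Toy components so the pipeline runs without any model or API key
toy_corpus = ["Task decomposition splits a task into steps.", "Cats are popular pets."]
# Keyword "retriever": keep documents sharing at least one lowercase word with the query
toy_retriever = Step(lambda q: [d for d in toy_corpus if set(q.lower().split()) & set(d.lower().split())])


def toy_format_docs(docs: list[str]) -> str:
    return "\n\n".join(docs)


toy_passthrough = Step(lambda x: x)
toy_prompt = Step(lambda d: f"Context:\n{d['context']}\n\nQuestion: {d['question']}")
toy_llm = Step(lambda text: text.upper())  # pretends to be an LLM

toy_chain = (
    {"context": toy_retriever | toy_format_docs, "question": toy_passthrough}
    | toy_prompt
    | toy_llm
)
print(toy_chain.invoke("What is Task Decomposition?"))
```

Python checks `dict.__or__` first, and that returns `NotImplemented` for a non-dict operand. Python then falls back to `Step.__ror__`, which is why a dict can sit at the head of the chain.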

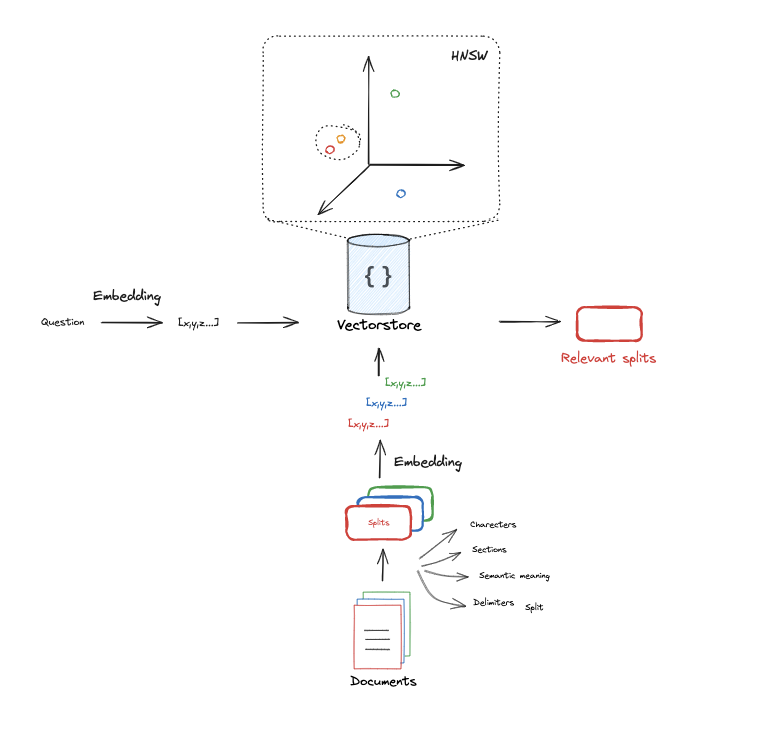

Indexing

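This part looks at how documents are split and embedded, how the question is embedded, and how similar data is then found using the HNSW algorithm.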

References:

[Count tokens](https://help.openai.com/en/articles/4936856-what-are-tokens-and-how-to-count-them) (roughly 4 characters per token)

Text embedding models

```python
# Question and document
question = "What kinds of pets do I like?"
document = "My favorite pet is a cat."

import tiktoken

def num_tokens_from_string(string: str, encoding_name: str) -> int:
    """Returns the number of tokens in a text string."""
    encoding = tiktoken.get_encoding(encoding_name)
    num_tokens = len(encoding.encode(string))
    return num_tokens

# Count tokens
num_tokens_from_string(question, "cl100k_base")

# Embed with OpenAI embeddings
from langchain_openai import OpenAIEmbeddings
embd = OpenAIEmbeddings()
query_result = embd.embed_query(question)
document_result = embd.embed_query(document)
len(query_result)

import numpy as np

# Cosine similarity
def cosine_similarity(vec1, vec2):
    dot_product = np.dot(vec1, vec2)
    norm_vec1 = np.linalg.norm(vec1)
    norm_vec2 = np.linalg.norm(vec2)
    return dot_product / (norm_vec1 * norm_vec2)

similarity = cosine_similarity(query_result, document_result)
print("Cosine Similarity:", similarity)

#### INDEXING ####

# Load the blog post
import bs4
from langchain_community.document_loaders import WebBaseLoader
loader = WebBaseLoader(
    web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),
    bs_kwargs=dict(
        parse_only=bs4.SoupStrainer(
            class_=("post-content", "post-title", "post-header")
        )
    ),
)
blog_docs = loader.load()

# Split (chunk_size and chunk_overlap are measured in tokens here)
from langchain.text_splitter import RecursiveCharacterTextSplitter
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=300,
    chunk_overlap=50)

# Make splits
splits = text_splitter.split_documents(blog_docs)

# Index
from langchain_openai import OpenAIEmbeddings
from langchain_community.vectorstores import Chroma
vectorstore = Chroma.from_documents(documents=splits,
                                    embedding=OpenAIEmbeddings())

retriever = vectorstore.as_retriever()
```
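The two splitter parameters, `chunk_size` and `chunk_overlap`, control how the text is cut. The sketch below is deliberately simplified: a fixed-size sliding window over characters. `RecursiveCharacterTextSplitter` actually works differently, splitting recursively on separators, and `from_tiktoken_encoder` counts tokens rather than characters. The sketch only shows what "overlap" means: each chunk starts `chunk_size - chunk_overlap` characters after the previous one.

```python
def sliding_window_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into fixed-size character windows that overlap by chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


print(sliding_window_chunks("abcdefghij", chunk_size=4, chunk_overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']
```

Under the rough 4-characters-per-token rule of thumb, a 300-token chunk corresponds to about 1,200 characters of English text.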

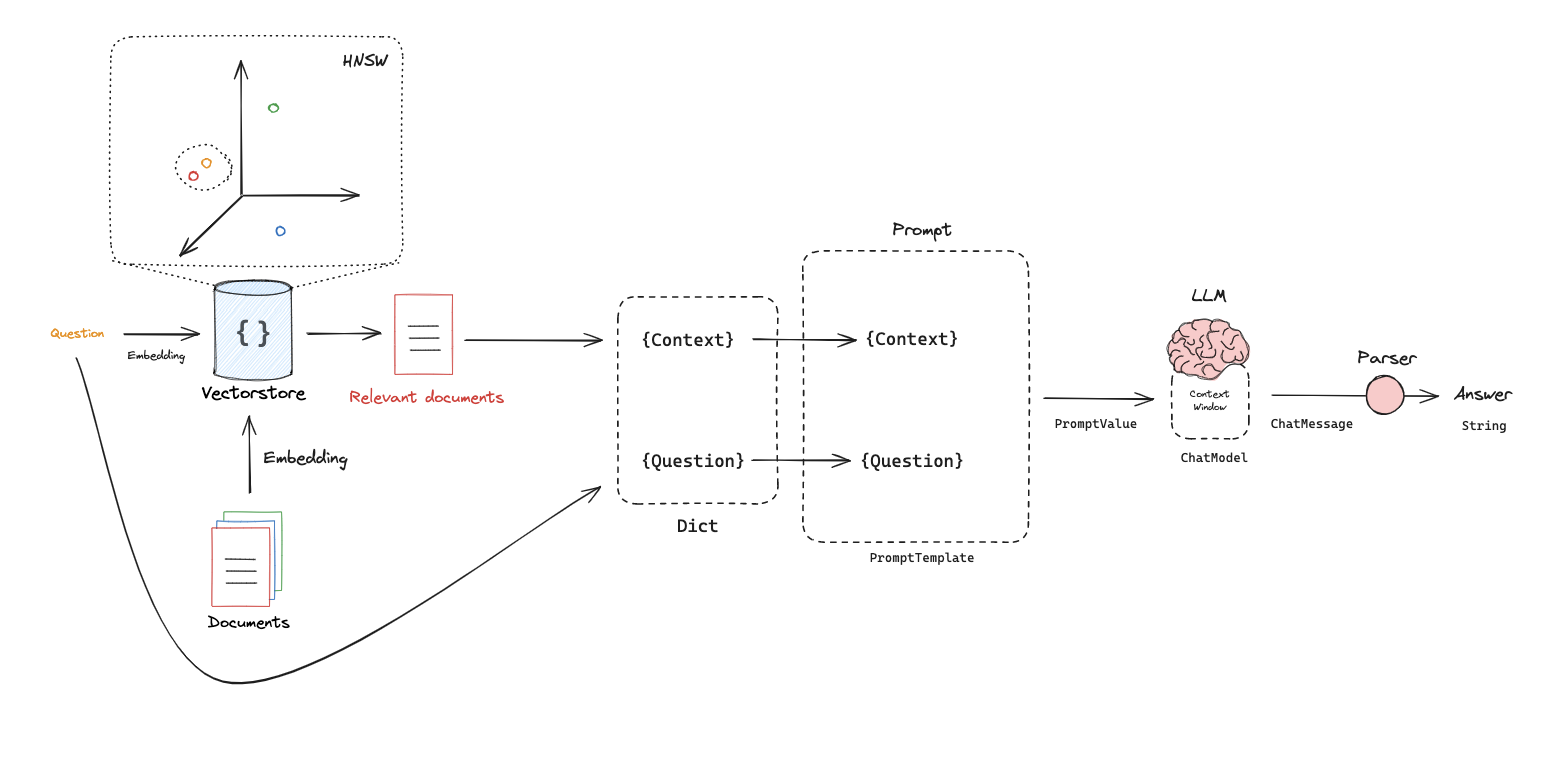

Retrieval

```python
# Index
from langchain_openai import OpenAIEmbeddings
from langchain_community.vectorstores import Chroma
vectorstore = Chroma.from_documents(documents=splits,
                                    embedding=OpenAIEmbeddings())


retriever = vectorstore.as_retriever(search_kwargs={"k": 1})
docs = retriever.get_relevant_documents("What is Task Decomposition?")
len(docs)
```
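Passing `search_kwargs={"k": 1}` asks the retriever for the single most similar chunk. Conceptually, this is a top-k search by similarity score. The sketch below does that search by brute force, using cosine similarity over made-up 3-dimensional vectors. A real vector store such as Chroma uses an approximate index (HNSW) instead of scanning every vector, so treat this only as an illustration of what "top k" means.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int) -> list[str]:
    """Return the ids of the k documents most similar to the query (brute-force scan)."""
    ranked = sorted(doc_vecs, key=lambda doc_id: cosine(query_vec, doc_vecs[doc_id]), reverse=True)
    return ranked[:k]


# Made-up "embeddings", purely for illustration
doc_vecs = {
    "task_decomposition": [0.9, 0.1, 0.0],
    "memory": [0.1, 0.9, 0.1],
    "tool_use": [0.2, 0.2, 0.9],
}
query_vec = [0.8, 0.2, 0.1]
print(top_k(query_vec, doc_vecs, k=1))  # ['task_decomposition']
print(top_k(query_vec, doc_vecs, k=2))  # ['task_decomposition', 'tool_use']
```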

Generation

```python
from langchain_openai import ChatOpenAI
from langchain.prompts import ChatPromptTemplate

# Prompt
template = """Answer the question based only on the following context:
{context}

Question: {question}
"""

prompt = ChatPromptTemplate.from_template(template)
prompt

# LLM
llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

# Chain
chain = prompt | llm

# Run
chain.invoke({"context": docs, "question": "What is Task Decomposition?"})

from langchain import hub
prompt_hub_rag = hub.pull("rlm/rag-prompt")

prompt_hub_rag

# RAG chain
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

rag_chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

rag_chain.invoke("What is Task Decomposition?")
```
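Before the LLM is called, the prompt template is filled in with the retrieved context and the question. The plain-Python sketch below mirrors that step using `str.format`. Here `join_chunks` is a hypothetical helper that joins chunks the same way `format_docs` does in the overview. The sketch stands in for `ChatPromptTemplate` only to show the text the model ends up seeing. Note one difference from the `rag_chain` above: that chain passes the retriever's raw list of `Document` objects as `context`, so the model sees their string representation. Joining the page contents first gives the model cleaner text.

```python
TEMPLATE = """Answer the question based only on the following context:
{context}

Question: {question}
"""


def join_chunks(page_contents: list[str]) -> str:
    """Join chunk texts with blank lines, like format_docs in the overview."""
    return "\n\n".join(page_contents)


def build_prompt(page_contents: list[str], question: str) -> str:
    """Fill the template with the joined context and the question."""
    return TEMPLATE.format(context=join_chunks(page_contents), question=question)


chunks = [
    "Task decomposition breaks a hard task into smaller steps.",
    "Chain of Thought asks the model to think step by step.",
]
print(build_prompt(chunks, "What is Task Decomposition?"))
```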

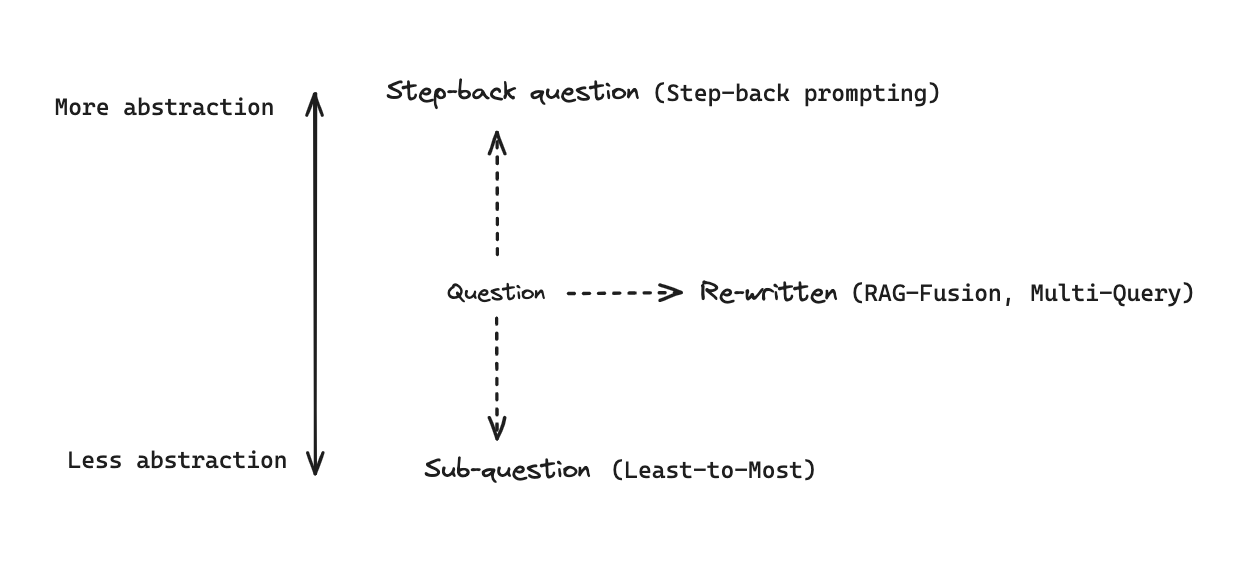

Query Translation

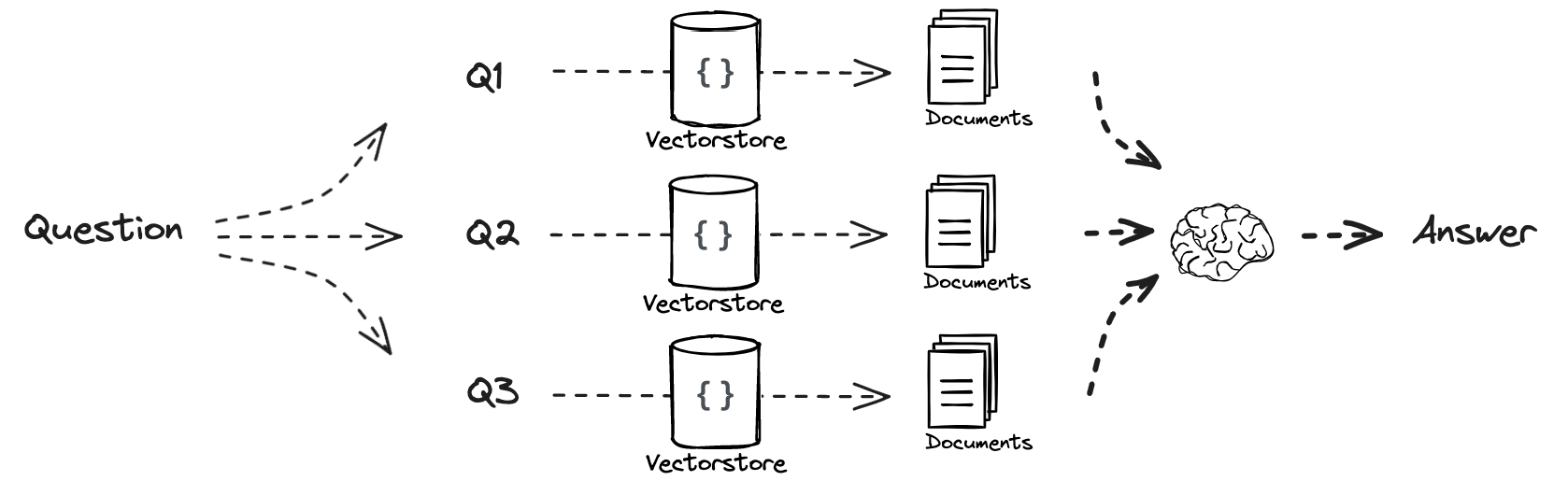

Multi Query

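Rewrite the single original question as several differently phrased questions, retrieve documents for each one, and merge the results into a deduplicated union.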


```python
#### INDEXING ####

# Load the blog post
import bs4
from langchain_community.document_loaders import WebBaseLoader
loader = WebBaseLoader(
    web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),
    bs_kwargs=dict(
        parse_only=bs4.SoupStrainer(
            class_=("post-content", "post-title", "post-header")
        )
    ),
)
blog_docs = loader.load()

# Split
from langchain.text_splitter import RecursiveCharacterTextSplitter
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=300,
    chunk_overlap=50)

# Make splits
splits = text_splitter.split_documents(blog_docs)

# Index
from langchain_openai import OpenAIEmbeddings
from langchain_community.vectorstores import Chroma
vectorstore = Chroma.from_documents(documents=splits,
                                    embedding=OpenAIEmbeddings())

retriever = vectorstore.as_retriever()


#### PROMPT ####
from langchain.prompts import ChatPromptTemplate

# Multi Query: Different Perspectives
# Use the LLM to rewrite one question as several questions
template = """You are an AI language model assistant. Your task is to generate five
different versions of the given user question to retrieve relevant documents from a vector
database. By generating multiple perspectives on the user question, your goal is to help
the user overcome some of the limitations of the distance-based similarity search.
Provide these alternative questions separated by newlines. Original question: {question}"""
prompt_perspectives = ChatPromptTemplate.from_template(template)

from langchain_core.output_parsers import StrOutputParser
from langchain_openai import ChatOpenAI

generate_queries = (
    prompt_perspectives
    | ChatOpenAI(temperature=0)
    | StrOutputParser()
    | (lambda x: x.split("\n"))
)

from langchain.load import dumps, loads

# Merge and deduplicate
def get_unique_union(documents: list[list]):
    """ Unique union of retrieved docs """
    # Flatten list of lists, and convert each Document to string
    flattened_docs = [dumps(doc) for sublist in documents for doc in sublist]
    # Get unique documents
    unique_docs = list(set(flattened_docs))
    # Return
    return [loads(doc) for doc in unique_docs]

# Retrieve
question = "What is task decomposition for LLM agents?"
retrieval_chain = generate_queries | retriever.map() | get_unique_union
docs = retrieval_chain.invoke({"question": question})
len(docs)


from operator import itemgetter
from langchain_openai import ChatOpenAI
from langchain_core.runnables import RunnablePassthrough

# RAG
template = """Answer the following question based on this context:

{context}

Question: {question}
"""

prompt = ChatPromptTemplate.from_template(template)

llm = ChatOpenAI(temperature=0)

final_rag_chain = (
    {"context": retrieval_chain,
     "question": itemgetter("question")}
    | prompt
    | llm
    | StrOutputParser()
)

final_rag_chain.invoke({"question": question})
```
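`get_unique_union` removes duplicates by passing the documents through a `set`, which throws away their retrieval order. If order matters, it is easy to write a variant that keeps the first occurrence of each document. The sketch below is a hypothetical alternative, not part of the original code. To stay self-contained, it works on plain string ids instead of LangChain `Document` objects.

```python
def unique_union_ordered(results: list[list[str]]) -> list[str]:
    """Flatten per-query result lists, keeping only the first occurrence of each item."""
    seen = set()
    merged = []
    for docs in results:
        for doc in docs:
            if doc not in seen:
                seen.add(doc)
                merged.append(doc)
    return merged


# Made-up results retrieved for three rephrasings of the same question
per_query = [["d1", "d2"], ["d2", "d3"], ["d1", "d4"]]
print(unique_union_ordered(per_query))  # ['d1', 'd2', 'd3', 'd4']
```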

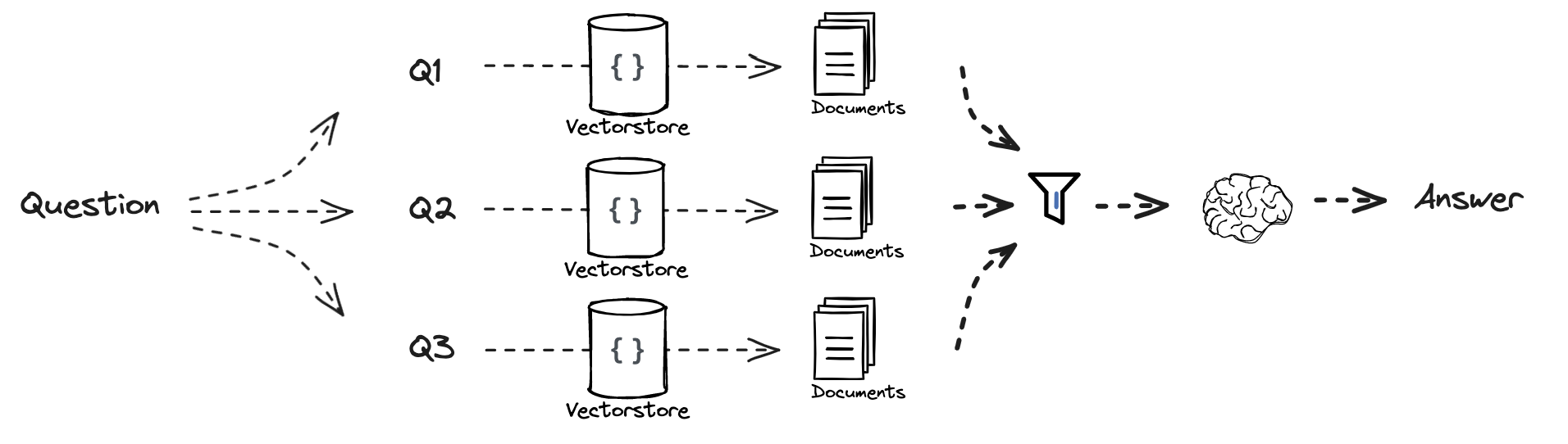

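RAG-Fusion

Generate several related queries, retrieve a ranked list of documents for each one, and fuse those rankings into a single list with Reciprocal Rank Fusion (RRF).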


```python
from operator import itemgetter

from langchain.prompts import ChatPromptTemplate

# Reuses `retriever`, `llm` and `question` from the Multi Query section

# RAG-Fusion: Related
template = """You are a helpful assistant that generates multiple search queries based on a single input query. \n
Generate multiple search queries related to: {question} \n
Output (4 queries):"""
prompt_rag_fusion = ChatPromptTemplate.from_template(template)

from langchain_core.output_parsers import StrOutputParser
from langchain_openai import ChatOpenAI

generate_queries = (
    prompt_rag_fusion
    | ChatOpenAI(temperature=0)
    | StrOutputParser()
    | (lambda x: x.split("\n"))
)

from langchain.load import dumps, loads

def reciprocal_rank_fusion(results: list[list], k=60):
    """ Reciprocal_rank_fusion that takes multiple lists of ranked documents
        and an optional parameter k used in the RRF formula """

    # Initialize a dictionary to hold the fused score for each unique document
    fused_scores = {}

    # Iterate through each list of ranked documents
    for docs in results:
        # Iterate through each document in the list, with its rank (position in the list)
        for rank, doc in enumerate(docs):
            # Serialize the document so it can be used as a dictionary key
            doc_str = dumps(doc)
            # If the document is not yet in fused_scores, add it with an initial score of 0
            if doc_str not in fused_scores:
                fused_scores[doc_str] = 0
            # Update the document's score using the RRF formula: 1 / (rank + k)
            fused_scores[doc_str] += 1 / (rank + k)

    # Sort documents by fused score in descending order to get the final reranked results
    reranked_results = [
        (loads(doc), score)
        for doc, score in sorted(fused_scores.items(), key=lambda x: x[1], reverse=True)
    ]

    # Return the reranked results as a list of (document, fused score) tuples
    return reranked_results

retrieval_chain_rag_fusion = generate_queries | retriever.map() | reciprocal_rank_fusion
docs = retrieval_chain_rag_fusion.invoke({"question": question})
len(docs)

from langchain_core.runnables import RunnablePassthrough

# RAG
template = """Answer the following question based on this context:

{context}

Question: {question}
"""

prompt = ChatPromptTemplate.from_template(template)

final_rag_chain = (
    {"context": retrieval_chain_rag_fusion,
     "question": itemgetter("question")}
    | prompt
    | llm
    | StrOutputParser()
)

final_rag_chain.invoke({"question": question})
```
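To make the behavior of `reciprocal_rank_fusion` concrete, here is the same scoring rule applied to plain string ids instead of `Document` objects. It follows the code above: ranks are 0-based, and each appearance of a document adds `1 / (rank + k)` to its score, with `k=60`. Documents that rank well in several lists accumulate the highest totals.

```python
def rrf(results: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Reciprocal Rank Fusion over ranked id lists (0-based rank, as in the code above)."""
    scores: dict[str, float] = {}
    for ranking in results:
        for rank, doc_id in enumerate(ranking):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1 / (rank + k)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Two queries returned overlapping rankings
fused = rrf([["a", "b", "c"], ["b", "c", "d"]])
for doc_id, score in fused:
    print(doc_id, round(score, 5))
# Order: b, c, a, d. "b" (ranks 1 and 0) beats "a", which ranked first only once.
```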

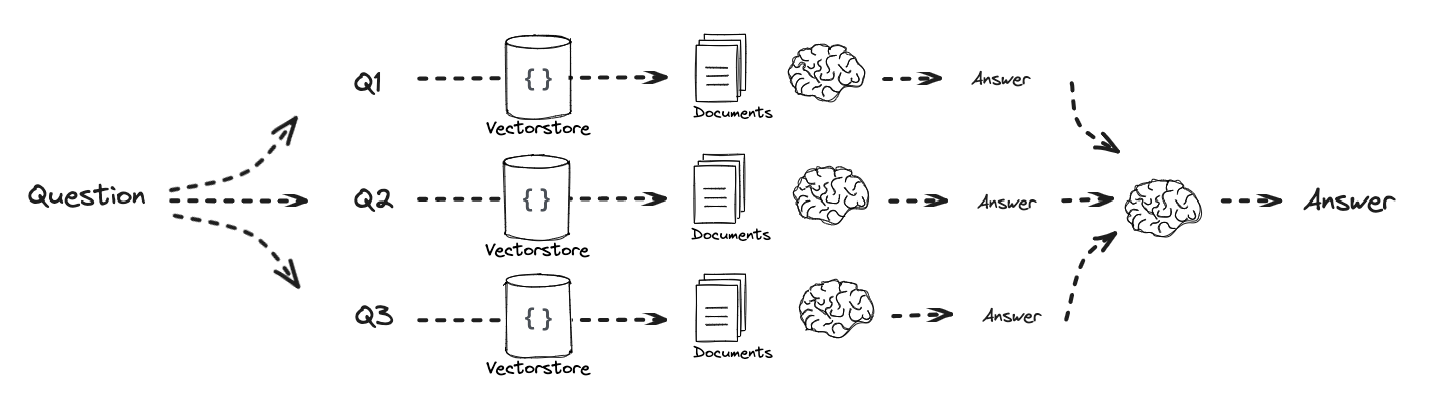

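Decomposition

Break the question into sub-questions and answer them one after another. Each earlier question-answer pair is fed into the next step as background.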

```python
from langchain.prompts import ChatPromptTemplate

# Decomposition
template = """You are a helpful assistant that generates multiple sub-questions related to an input question. \n
The goal is to break down the input into a set of sub-problems / sub-questions that can be answered in isolation. \n
Generate multiple search queries related to: {question} \n
Output (3 queries):"""
prompt_decomposition = ChatPromptTemplate.from_template(template)

from langchain_openai import ChatOpenAI
from langchain_core.output_parsers import StrOutputParser

# LLM
llm = ChatOpenAI(temperature=0)

# Chain
generate_queries_decomposition = (prompt_decomposition | llm | StrOutputParser() | (lambda x: x.split("\n")))

# Run
question = "What are the main components of an LLM-powered autonomous agent system?"
questions = generate_queries_decomposition.invoke({"question": question})

# Prompt
template = """Here is the question you need to answer:

\n --- \n {question} \n --- \n

Here is any available background question + answer pairs:

\n --- \n {q_a_pairs} \n --- \n

Here is additional context relevant to the question:

\n --- \n {context} \n --- \n

Use the above context and any background question + answer pairs to answer the question: \n {question}
"""

decomposition_prompt = ChatPromptTemplate.from_template(template)

from operator import itemgetter
from langchain_core.output_parsers import StrOutputParser

def format_qa_pair(question, answer):
    """Format Q and A pair"""

    formatted_string = ""
    formatted_string += f"Question: {question}\nAnswer: {answer}\n\n"
    return formatted_string.strip()

# llm
llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

# Answer the sub-questions in order, carrying earlier Q&A pairs forward
# (reuses `retriever` from the earlier sections)
q_a_pairs = ""
for q in questions:

    rag_chain = (
        {"context": itemgetter("question") | retriever,
         "question": itemgetter("question"),
         "q_a_pairs": itemgetter("q_a_pairs")}
        | decomposition_prompt
        | llm
        | StrOutputParser())

    answer = rag_chain.invoke({"question": q, "q_a_pairs": q_a_pairs})
    q_a_pair = format_qa_pair(q, answer)
    q_a_pairs = q_a_pairs + "\n---\n" + q_a_pair
```
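The loop above answers the sub-questions in order and appends each finished Q&A pair to `q_a_pairs`, so later sub-questions can build on earlier answers. The sketch below isolates just that control flow. `answer_fn` and `fake_answer` are hypothetical stand-ins for the RAG chain, so the example runs without an LLM or a retriever.

```python
from typing import Callable


def answer_sequentially(sub_questions: list[str], answer_fn: Callable[[str, str], str]) -> tuple[str, str]:
    """Answer sub-questions in order, passing the accumulated Q&A pairs as background.

    Returns the last answer and the accumulated background string.
    """
    q_a_pairs = ""
    answer = ""
    for q in sub_questions:
        answer = answer_fn(q, q_a_pairs)
        q_a_pairs += f"\n---\nQuestion: {q}\nAnswer: {answer}"
    return answer, q_a_pairs


# Hypothetical answerer: reports how many earlier Q&A pairs it was given
def fake_answer(question: str, background: str) -> str:
    return f"answer to '{question}' using {background.count('Question:')} earlier pair(s)"


final, history = answer_sequentially(["planning?", "memory?", "tools?"], fake_answer)
print(final)  # answer to 'tools?' using 2 earlier pair(s)
```

As in the original loop, the answer to the last sub-question is the one that has seen the most background.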

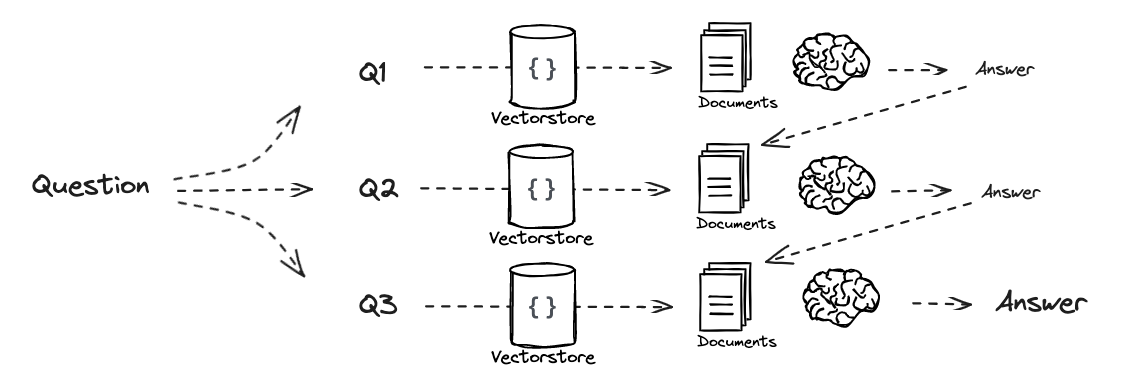

Answer individually

```python
# Answer each sub-question separately
# Reuses `retriever`, `llm`, `question` and `generate_queries_decomposition` from the Decomposition section

from langchain import hub
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough, RunnableLambda
from langchain_core.output_parsers import StrOutputParser
from langchain_openai import ChatOpenAI

# RAG prompt
prompt_rag = hub.pull("rlm/rag-prompt")

def retrieve_and_rag(question, prompt_rag, sub_question_generator_chain):
    """RAG on each sub-question"""

    # Use the decomposition chain to generate sub-questions
    sub_questions = sub_question_generator_chain.invoke({"question": question})

    # Initialize a list to hold the RAG chain results
    rag_results = []

    for sub_question in sub_questions:

        # Retrieve documents for each sub-question
        retrieved_docs = retriever.get_relevant_documents(sub_question)

        # Use the retrieved documents and the sub-question in the RAG chain
        answer = (prompt_rag | llm | StrOutputParser()).invoke({"context": retrieved_docs,
                                                                "question": sub_question})
        rag_results.append(answer)

    return rag_results, sub_questions

# Run retrieval + RAG for every sub-question
answers, questions = retrieve_and_rag(question, prompt_rag, generate_queries_decomposition)


def format_qa_pairs(questions, answers):
    """Format Q and A pairs"""

    formatted_string = ""
    for i, (question, answer) in enumerate(zip(questions, answers), start=1):
        formatted_string += f"Question {i}: {question}\nAnswer {i}: {answer}\n\n"
    return formatted_string.strip()

context = format_qa_pairs(questions, answers)

# Prompt
template = """Here is a set of Q+A pairs:

{context}

Use these to synthesize an answer to the question: {question}
"""

prompt = ChatPromptTemplate.from_template(template)

final_rag_chain = (
    prompt
    | llm
    | StrOutputParser()
)

final_rag_chain.invoke({"context": context, "question": question})
```
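This approach differs from the sequential loop in the Decomposition section. Each sub-question is answered independently, and the Q&A pairs are combined only at the end, for a final synthesis step. Because none of the sub-answers depends on another, they could in principle be computed concurrently, whereas the code above simply runs them one by one. The sketch below uses a thread pool for this. `answer_fn` is a hypothetical stand-in for the per-question RAG call, and the output format copies `format_qa_pairs`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def answer_independently(sub_questions: list[str], answer_fn: Callable[[str], str]) -> str:
    """Answer each sub-question on its own, then format all pairs for a synthesis prompt."""
    with ThreadPoolExecutor() as pool:
        # pool.map returns results in the same order as sub_questions
        answers = list(pool.map(answer_fn, sub_questions))
    return "\n\n".join(
        f"Question {i}: {q}\nAnswer {i}: {a}"
        for i, (q, a) in enumerate(zip(sub_questions, answers), start=1)
    )


# Hypothetical answerer that just upper-cases the question
toy_context = answer_independently(["planning?", "memory?"], lambda q: q.rstrip("?").upper())
print(toy_context)
```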

Step Back

```python
# Few-shot examples
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate, FewShotChatMessagePromptTemplate
from langchain_core.runnables import RunnableLambda
from langchain_openai import ChatOpenAI

examples = [
    {
        "input": "Could the members of The Police perform lawful arrests?",
        "output": "what can the members of The Police do?",
    },
    {
        "input": "Jan Sindel’s was born in what country?",
        "output": "what is Jan Sindel’s personal history?",
    },
]
# Now transform these into example messages
example_prompt = ChatPromptTemplate.from_messages(
    [
        ("human", "{input}"),
        ("ai", "{output}"),
    ]
)
few_shot_prompt = FewShotChatMessagePromptTemplate(
    example_prompt=example_prompt,
    examples=examples,
)
prompt = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            """You are an expert at world knowledge. Your task is to step back and paraphrase a question to a more generic step-back question, which is easier to answer. Here are a few examples:""",
        ),
        # Few-shot examples
        few_shot_prompt,
        # New question
        ("user", "{question}"),
    ]
)

generate_queries_step_back = prompt | ChatOpenAI(temperature=0) | StrOutputParser()
question = "What is task decomposition for LLM agents?"
generate_queries_step_back.invoke({"question": question})

# Response prompt
response_prompt_template = """You are an expert of world knowledge. I am going to ask you a question. Your response should be comprehensive and not contradicted with the following context if they are relevant. Otherwise, ignore them if they are not relevant.

{normal_context}
{step_back_context}

Original Question: {question}
Answer:"""
response_prompt = ChatPromptTemplate.from_template(response_prompt_template)

# Reuses `retriever` from the earlier sections
chain = (
    {
        # Retrieve context using the normal question
        "normal_context": RunnableLambda(lambda x: x["question"]) | retriever,
        # Retrieve context using the step-back question
        "step_back_context": generate_queries_step_back | retriever,
        # Pass on the question
        "question": lambda x: x["question"],
    }
    | response_prompt
    | ChatOpenAI(temperature=0)
    | StrOutputParser()
)

chain.invoke({"question": question})
```
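`FewShotChatMessagePromptTemplate` turns each example into a human message followed by an AI message. Those pairs sit between the system instruction and the new question. The plain-Python sketch below builds that message list as `(role, content)` tuples to show the layout the prompt roughly renders to. It approximates the result and is not LangChain's code. `build_step_back_messages` is a hypothetical helper, and the system text is shortened.

```python
def build_step_back_messages(
    system: str, examples: list[dict[str, str]], question: str
) -> list[tuple[str, str]]:
    """Lay out the system prompt, one (human, ai) pair per example, then the new question."""
    messages = [("system", system)]
    for example in examples:
        messages.append(("human", example["input"]))
        messages.append(("ai", example["output"]))
    # The new question goes last, as a human ("user") turn
    messages.append(("human", question))
    return messages


step_back_examples = [
    {"input": "Could the members of The Police perform lawful arrests?",
     "output": "what can the members of The Police do?"},
    {"input": "Jan Sindel’s was born in what country?",
     "output": "what is Jan Sindel’s personal history?"},
]
msgs = build_step_back_messages(
    "Step back and paraphrase the question into a more generic one.",
    step_back_examples,
    "What is task decomposition for LLM agents?",
)
for role, content in msgs:
    print(f"{role}: {content}")
```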

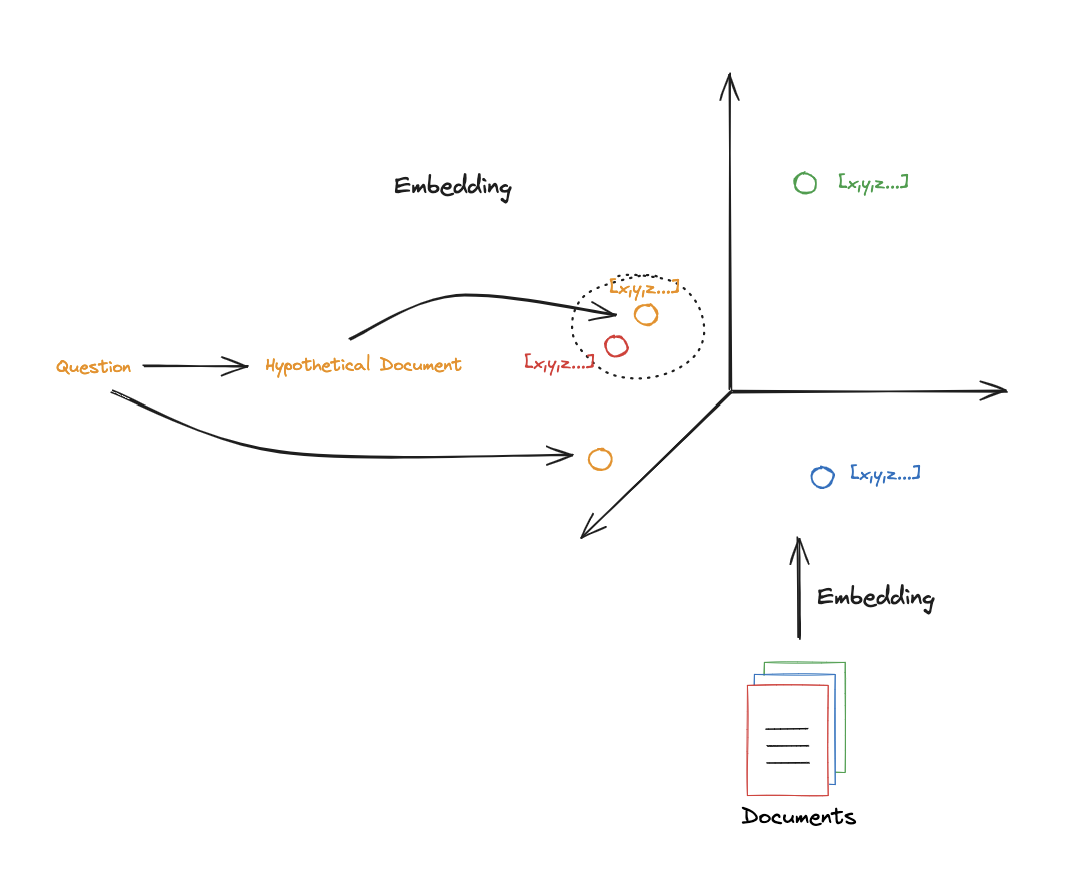

HyDE (Hypothetical Document Embeddings)

```python
from langchain.prompts import ChatPromptTemplate

# HyDE document generation
template = """Please write a scientific paper passage to answer the question
Question: {question}
Passage:"""
prompt_hyde = ChatPromptTemplate.from_template(template)

from langchain_core.output_parsers import StrOutputParser
from langchain_openai import ChatOpenAI

generate_docs_for_retrieval = (
    prompt_hyde | ChatOpenAI(temperature=0) | StrOutputParser()
)

# Run
question = "What is task decomposition for LLM agents?"
generate_docs_for_retrieval.invoke({"question": question})

# Retrieve with the generated passage instead of the raw question
# (reuses `retriever` and `llm` from the earlier sections)
retrieval_chain = generate_docs_for_retrieval | retriever
retrieved_docs = retrieval_chain.invoke({"question": question})
retrieved_docs

# RAG
template = """Answer the following question based on this context:

{context}

Question: {question}
"""

prompt = ChatPromptTemplate.from_template(template)

final_rag_chain = (
    prompt
    | llm
    | StrOutputParser()
)

final_rag_chain.invoke({"context": retrieved_docs, "question": question})
```
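The idea behind HyDE is that a generated answer passage looks more like the real documents than a short question does. So searching with the passage's embedding can land closer to the relevant chunks. The toy illustration below makes two assumptions. First, a bag-of-words "embedding" replaces a real embedding model. Second, a hard-coded passage replaces the LLM output. The documents and passage are made up. In this example, the question's filler words ("what is ... for llm agents") pull it toward the wrong document, while the hypothetical passage retrieves the right one.

```python
import math
from collections import Counter


def bow(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def retrieve(query_text: str, docs: dict[str, str]) -> str:
    """Return the id of the document whose toy embedding is closest to the query text."""
    q = bow(query_text)
    return max(docs, key=lambda doc_id: cosine(q, bow(docs[doc_id])))


toy_docs = {
    "decomposition": "task decomposition splits a complex task into smaller subgoals",
    "tools": "what is a good tool for llm agents",
}
toy_question = "what is task decomposition for llm agents"
# Hard-coded stand-in for the passage the LLM would write in the HyDE step
hypothetical_passage = "task decomposition breaks a complex task into smaller subgoals"

print(retrieve(toy_question, toy_docs))          # tools
print(retrieve(hypothetical_passage, toy_docs))  # decomposition
```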